Abundance: Trust

In our last post, Defining Abundance, we discussed:

- How abundance is created through abstraction. (Image: Black Box.)

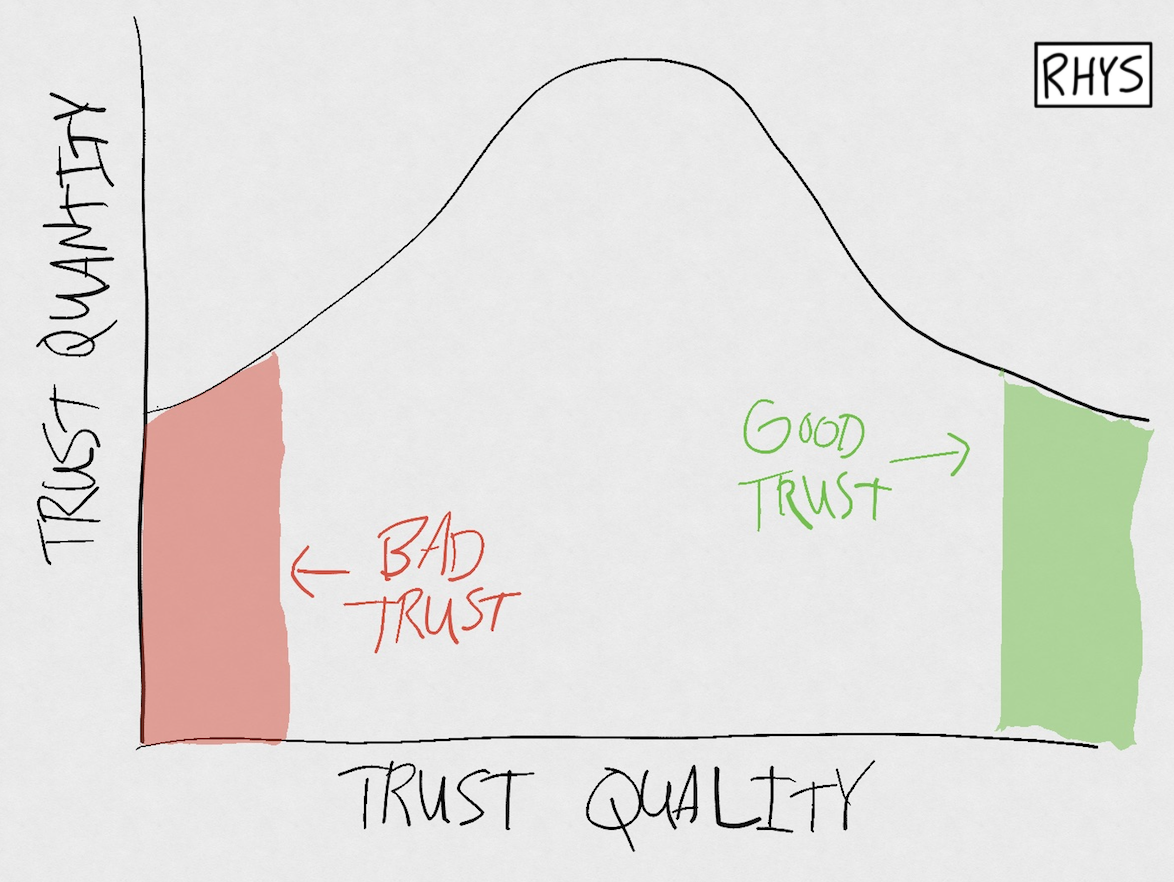

- How an increase in quantity leads to a wider spread in quality, which we called "variable-quality abundance". (Image: Raised graph with good and bad sides.)

- How individuals and systems can respond to variable-quality abundance. (Image: Shift the graph right and have individuals search for good stuff.)

Last time, we looked at abstracting food, goods, and information. Today, we'll look at abstracting trust. We'll:

I. Define trust

II. Explore the shift from centralized to decentralized trust

III. Understand how to create decentralized trust

IV. Look at how abundant trust leads to both good and bad trust

I. Defining Trust

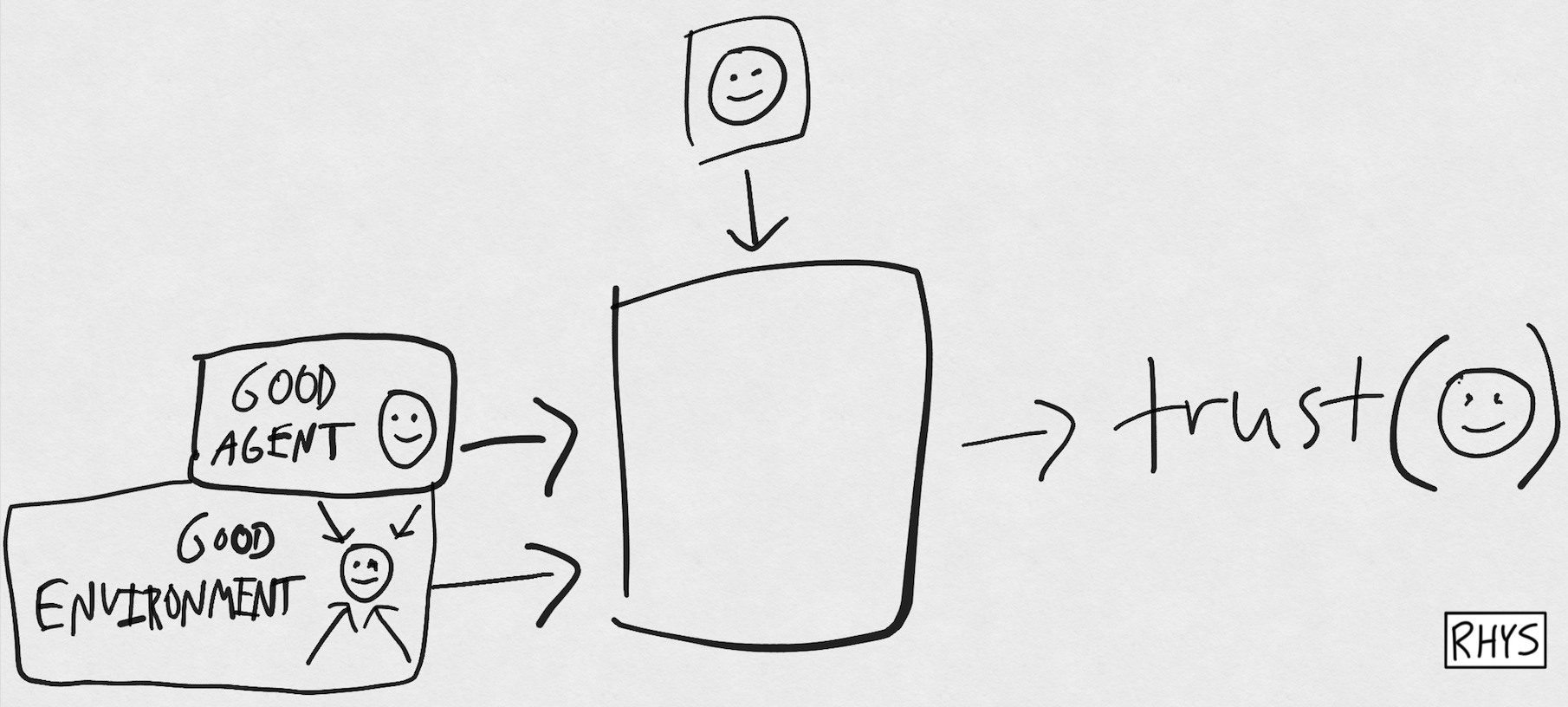

From last time, we learned about the process of abstraction: It increases the supply of a resource by commoditizing the creation process of that resource. It's the black box:

We know what the output will be—trust. But what are the inputs? And what is in the black box?

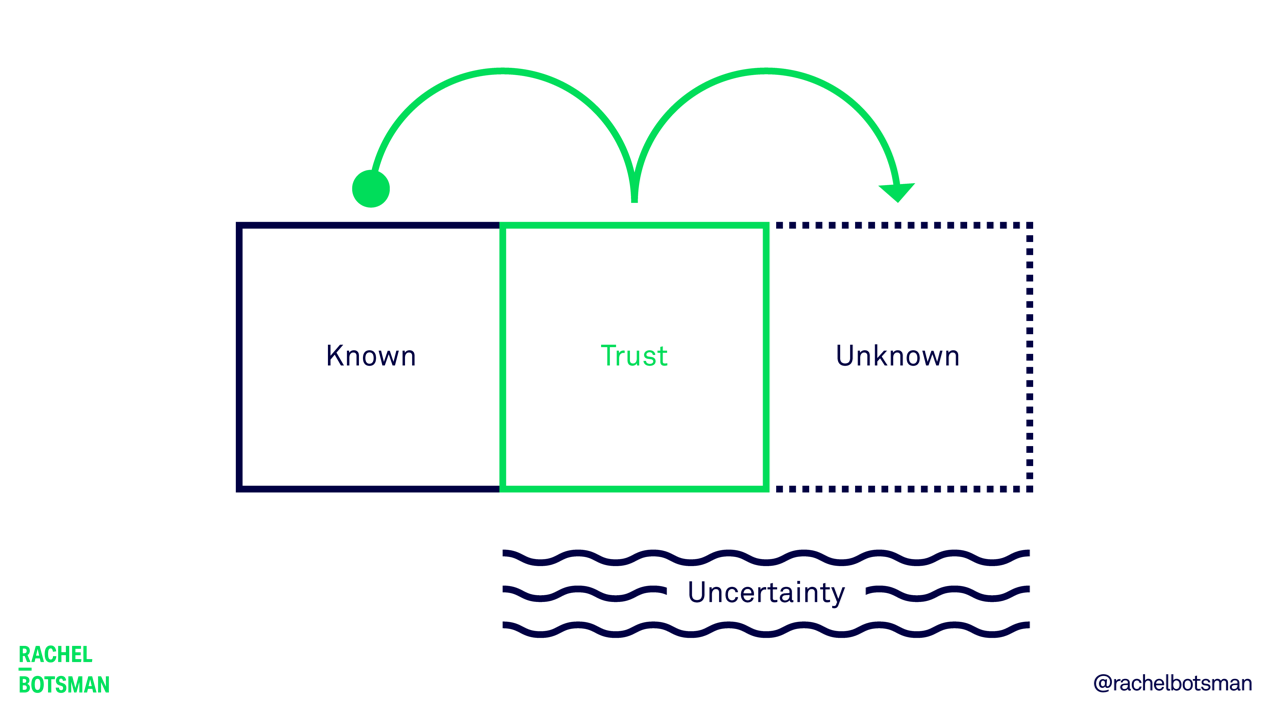

In order to answer that, we first need to understand—what is trust? I'll use Rachel Botsman's definition: Trust is a confident relationship with the unknown.

Trust is a "meta-resource". It doesn't directly meet needs itself, but it lowers the friction of making decisions. In this way, trust differs from other resources like food or goods. Those are consumable: you can eat an apple. But you can't consume trust. Instead, trust mediates our relationship to the unknown. Want to eat chocolate, buy a shirt, or read that NYT article? Ask trust first.

Put differently, trust works like a function rather than a consumable resource. For a given object, we can ask: is it trusted?

trust(object) --> 0-10

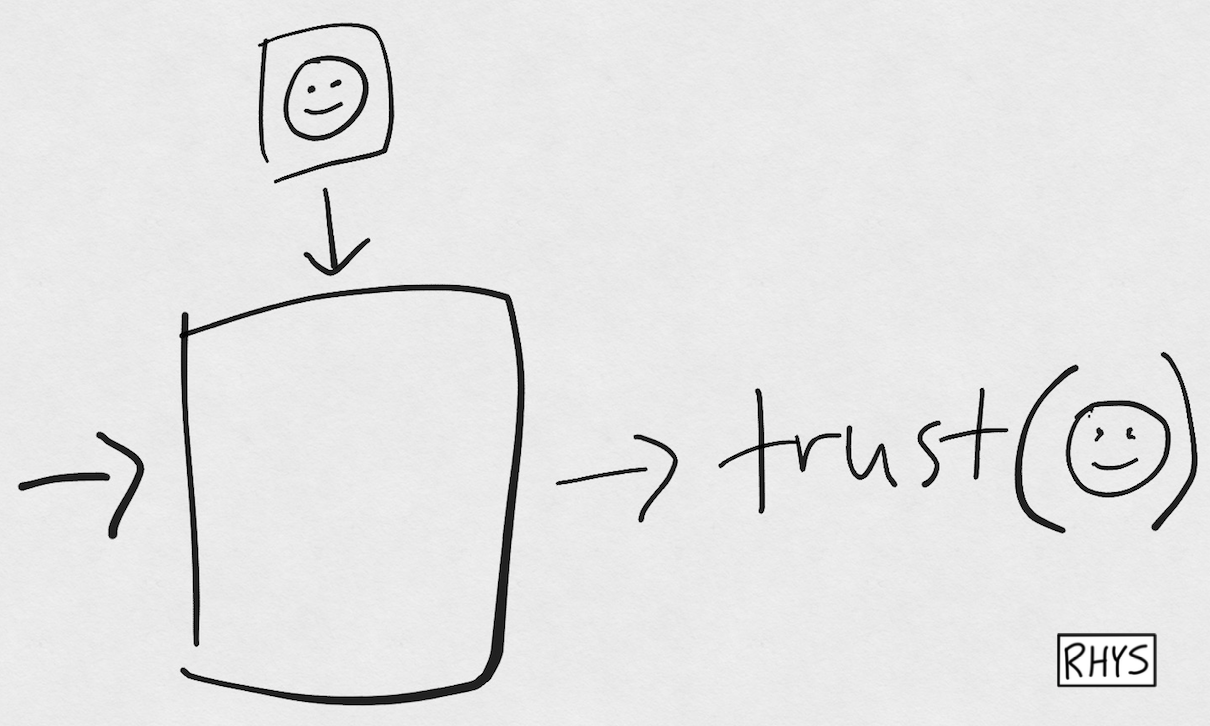

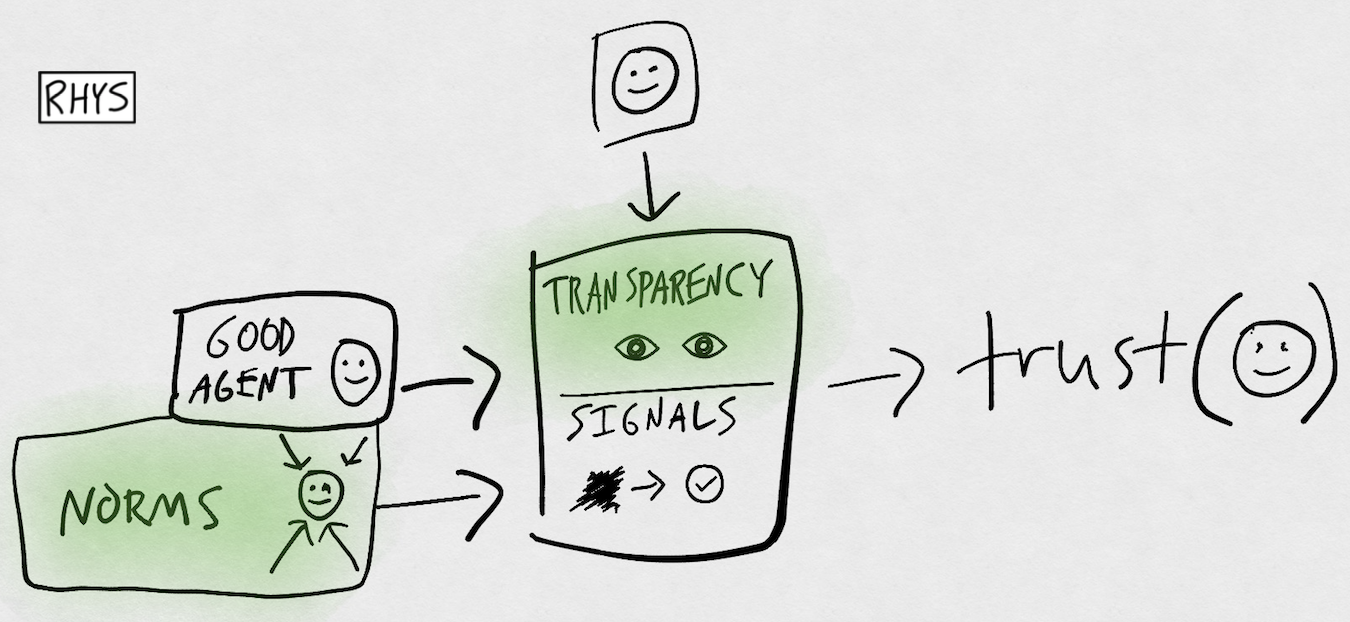

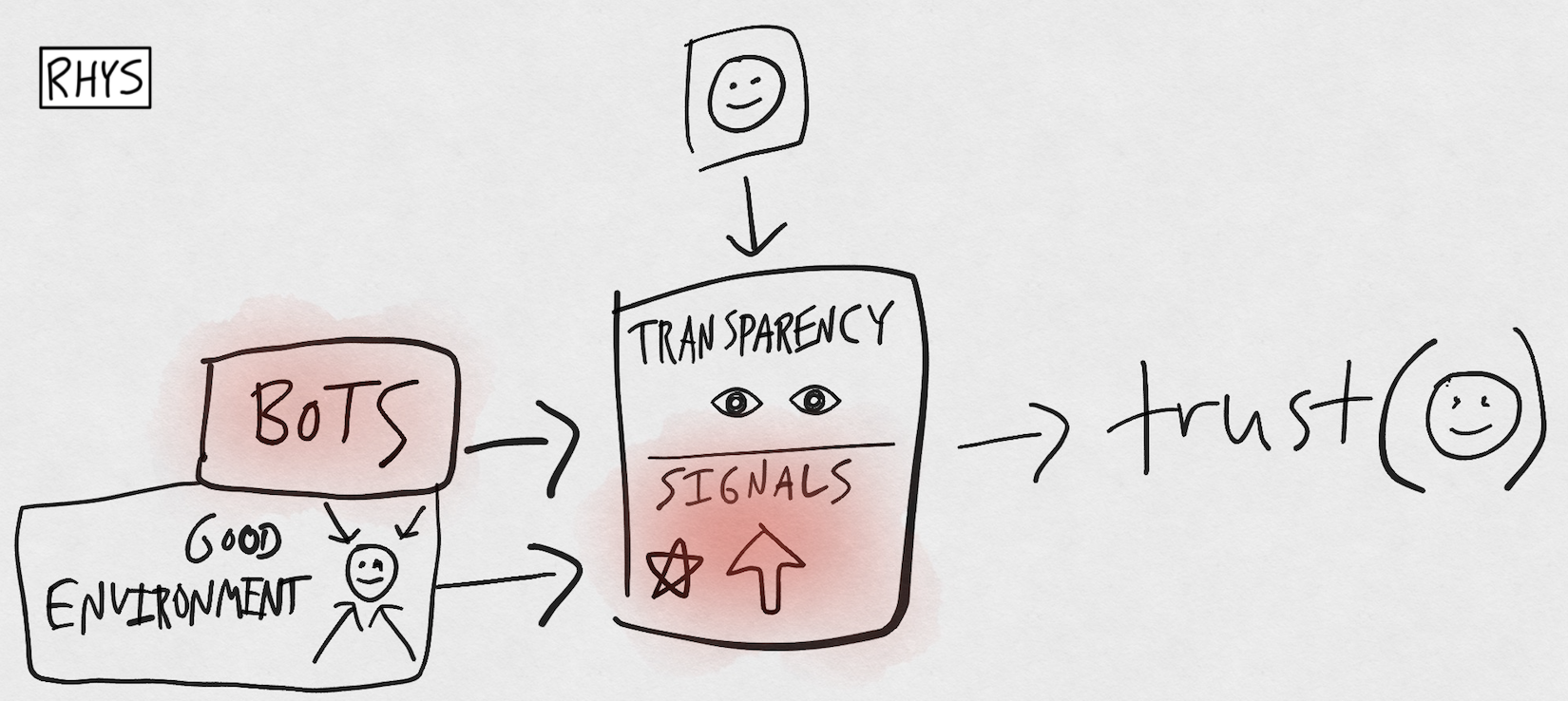

So actually, our black box should look like the one below. The abstraction is with respect to a given object (the 🙂 above the black box).

We're asking the question: can we trust this person? (Represented by 🙂)

We ask:

- Are they a good person?

- Is their environment helping them be good?

I like to think of the first question (are they good?) as: do we have aligned goals and values? These properties are internal to the person.

I like to think of the second question (is their environment good?) as: do we have aligned incentives? Are the four forces acting on Lessig's "pathetic dot" (norms, law, markets, and code) helping them be good? Are they praised for good behavior, punished by the law for bad behavior, paid $$ for helping others, and positively constrained by code?

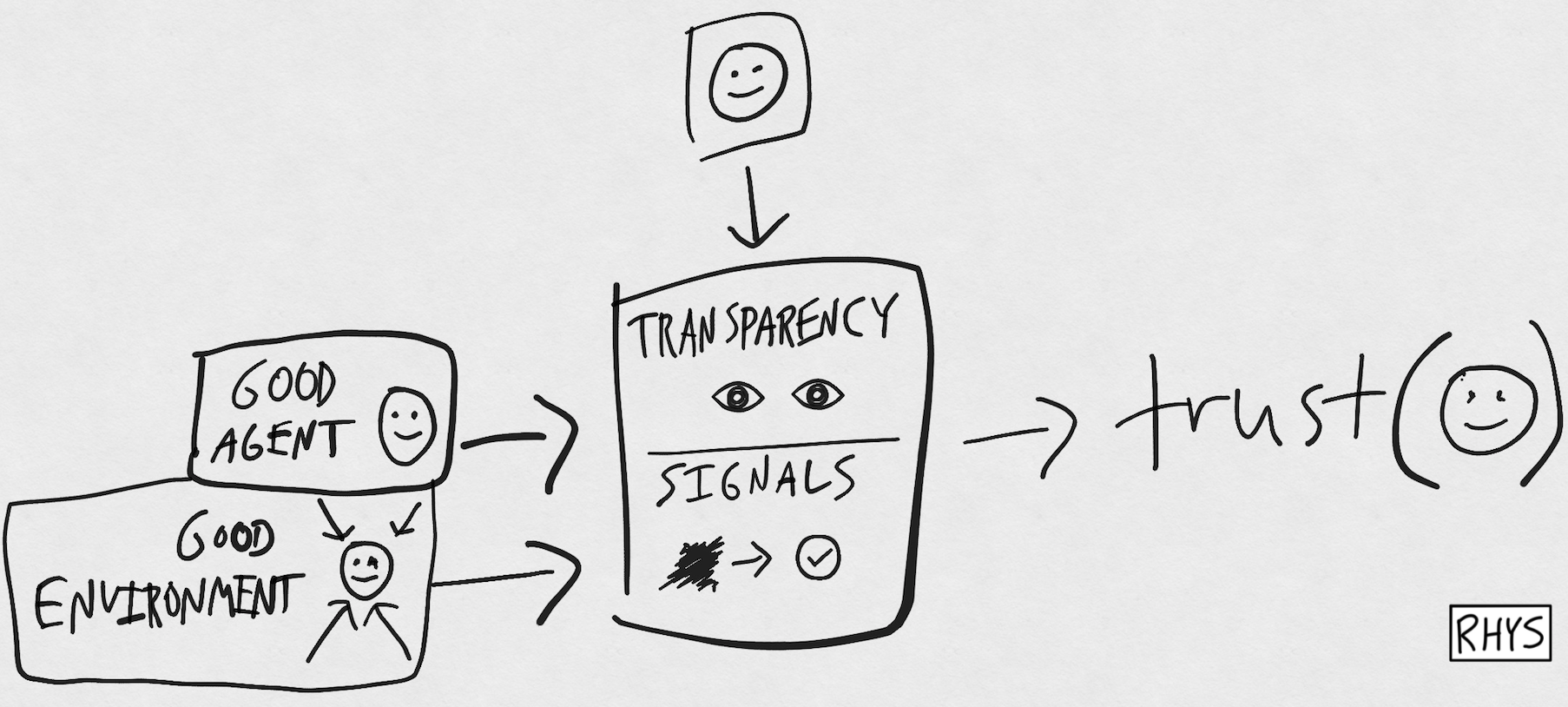

But how do we know if the person is good? Or if their environment is good? We can either directly see the true value through transparency, or can get signals of their goodness.

I like to think of transparency as making the unknown known. Trust is a confident relationship to the unknown. So we can increase trust by turning the unknown into the known. Then we don't "need" the trust as much.

But we don't always have full transparency. In that case, we can get signals from our network to determine trust. If lots of experts trust them and tons of normal people do too, then we should probably trust them!

Under the model above, who is the most trusted? A clone of yourself. You think you're "good" and you have an environment of good incentives. Plus, you have full transparency, so you don't even need signals. You trust your clone.

Who is the least trusted? Imagine an unknown, evil, infamous person. You know nothing about them except they have misaligned goals/values, misaligned incentives, and everyone says they're evil. Don't trust them.

In order to abstract trust, we can focus on these four categories (represented above):

- Making "good" people

- Putting them in a "good" environment

- Checking their goodness through full transparency

- Or checking goodness through signals
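To make the `trust(object) --> 0-10` idea concrete, here is a minimal sketch of how the four categories could combine into one score. The `Evidence` fields, the 0-to-1 scales, and the blending formula are all my own assumptions for illustration, not a real measure of trust.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """What we believe about a person, each value in [0, 1] (hypothetical inputs)."""
    aligned_goals: float       # category 1: are they a "good" person?
    aligned_incentives: float  # category 2: is their environment "good"?
    transparency: float        # category 3: how much can we see directly?
    network_signal: float      # category 4: what do others say about them?


def trust(e: Evidence) -> float:
    """Return a trust score from 0 to 10.

    Assumption: direct observation and network signals are blended,
    with transparency deciding how much weight direct observation gets.
    """
    observed = (e.aligned_goals + e.aligned_incentives) / 2
    belief = e.transparency * observed + (1 - e.transparency) * e.network_signal
    return round(10 * belief, 1)


# The two extremes from the text: a clone of yourself and an unknown, infamous person.
clone = Evidence(aligned_goals=1.0, aligned_incentives=1.0,
                 transparency=1.0, network_signal=0.5)
villain = Evidence(aligned_goals=0.0, aligned_incentives=0.0,
                   transparency=0.0, network_signal=0.0)

print(trust(clone))    # 10.0
print(trust(villain))  # 0.0
```

With full transparency the network signal drops out entirely, which matches the clone case: you don't need signals when you can see everything.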

Now, let's look at how centralized institutions abstract trust differently than decentralized networks.

II. From Centralized to Decentralized Trust

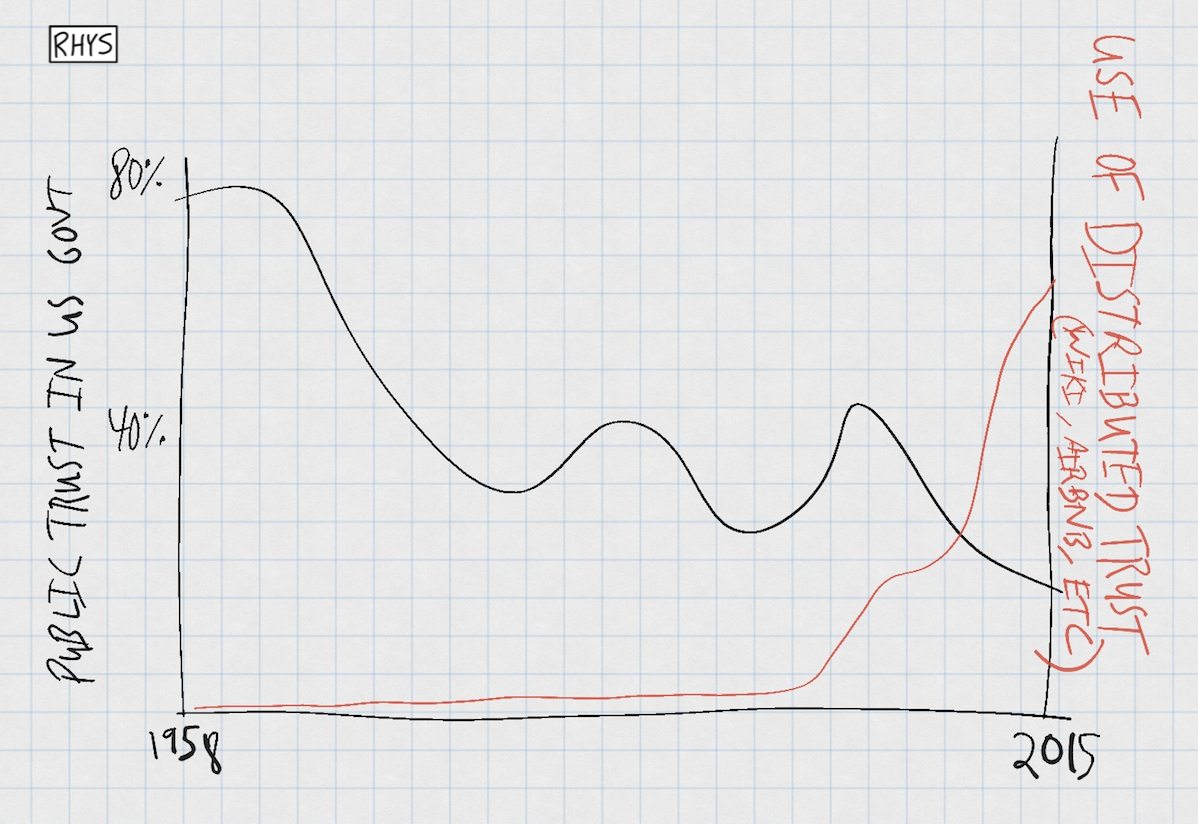

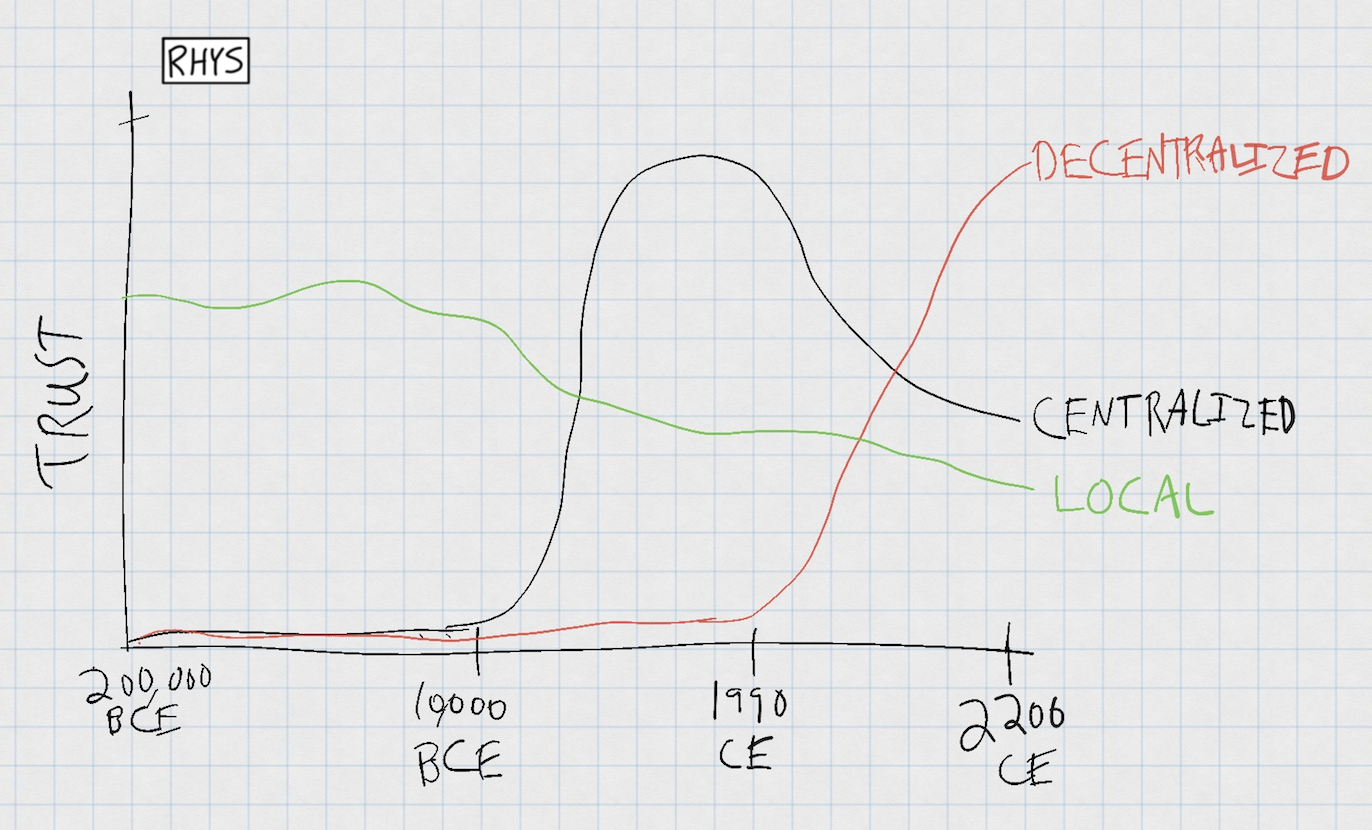

As you can see in the graph below, our trust in distributed networks (red) has increased as our trust in centralized institutions has decreased (black).

The black line is trust in the US government (source), which has been steadily decreasing since the end of WWII. The red line is "use of distributed trust": things like Wikipedia, AirBnB, etc. There's no exact way to measure distributed trust, but I roughly created a line that showed our increasing use of distributed trust.

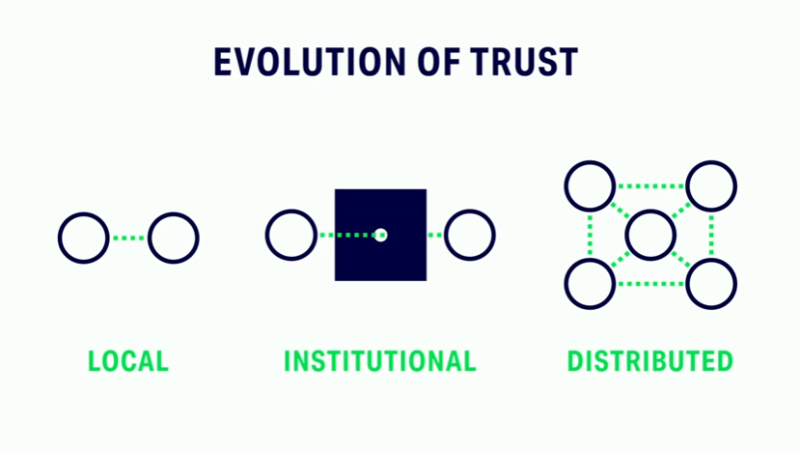

Before centralized trust, we had another type of trust: local trust. This is the kind of trust we had in hunter-gatherer societies.

So if we zoom out from the graph above and look at the full history of Homo sapiens, we get this:

- We primarily relied on local trust until the Agricultural Revolution (10,000 BCE).

- Then we created centralized, institutional trust with laws and religion.

- Only after creating the internet have we created decentralized trust with code.

In the next section, let's look at how trust is created:

- Locally face-to-face

- By centralized institutions with laws and religion

- By distributed networks with code

III. How to Create Local, Centralized, or Distributed Trust

How To Create Local Trust

In a hunter-gatherer environment where you know everyone around you, trust is created through norms and through transparency—everyone knows you.

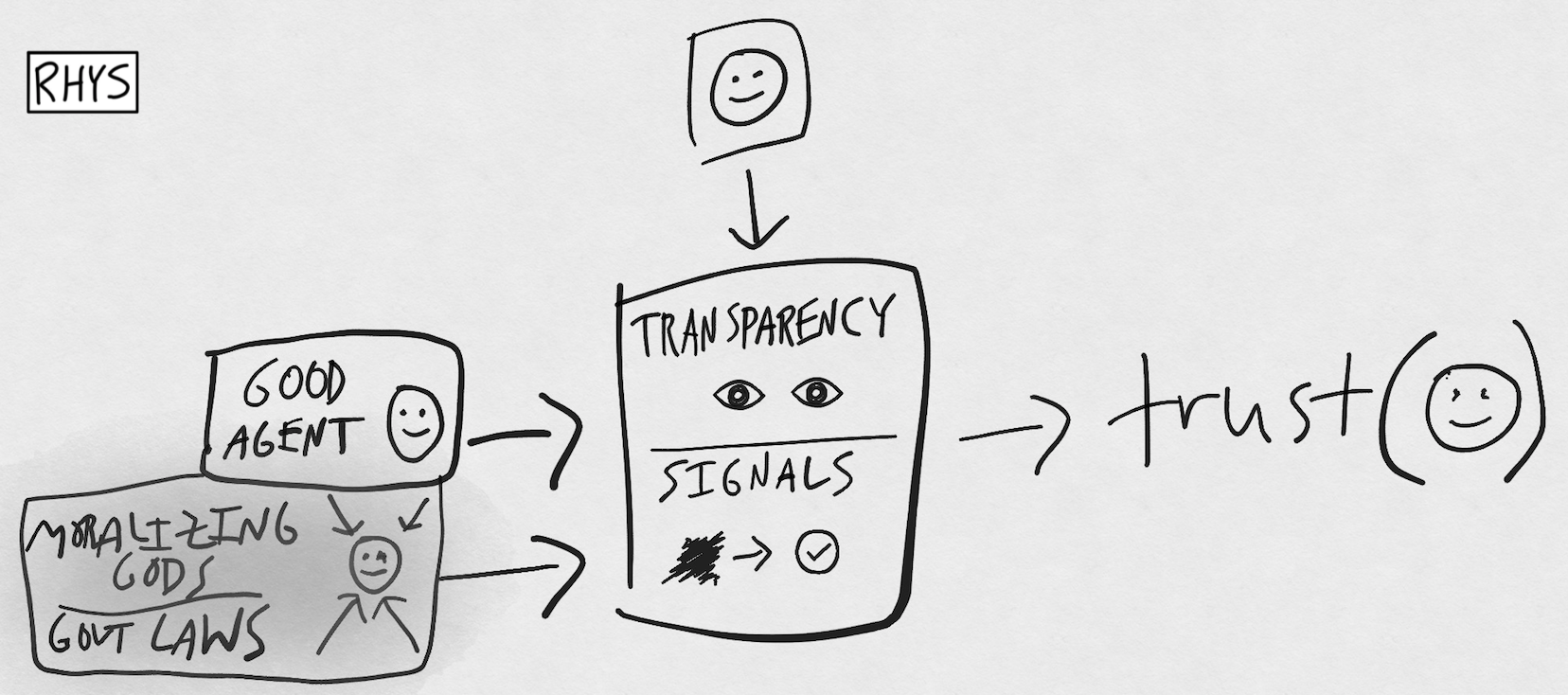

How Centralized Institutions Create Trust

Centralized institutions created trust through:

- Moralizing gods. God said "if you are bad, you will go to hell." This accountability system was crucial for expanding the trust circles to strangers in larger cities.

- Government laws. The government said "if you are bad, we will put you in jail." This accountability system allowed people to expand their trust circles and enter into contracts with strangers.

- Markets and money. The market said "if you are bad, you won't make as much money." This accountability system allowed people to enter the double thank you of capitalism and engage in trade with strangers.

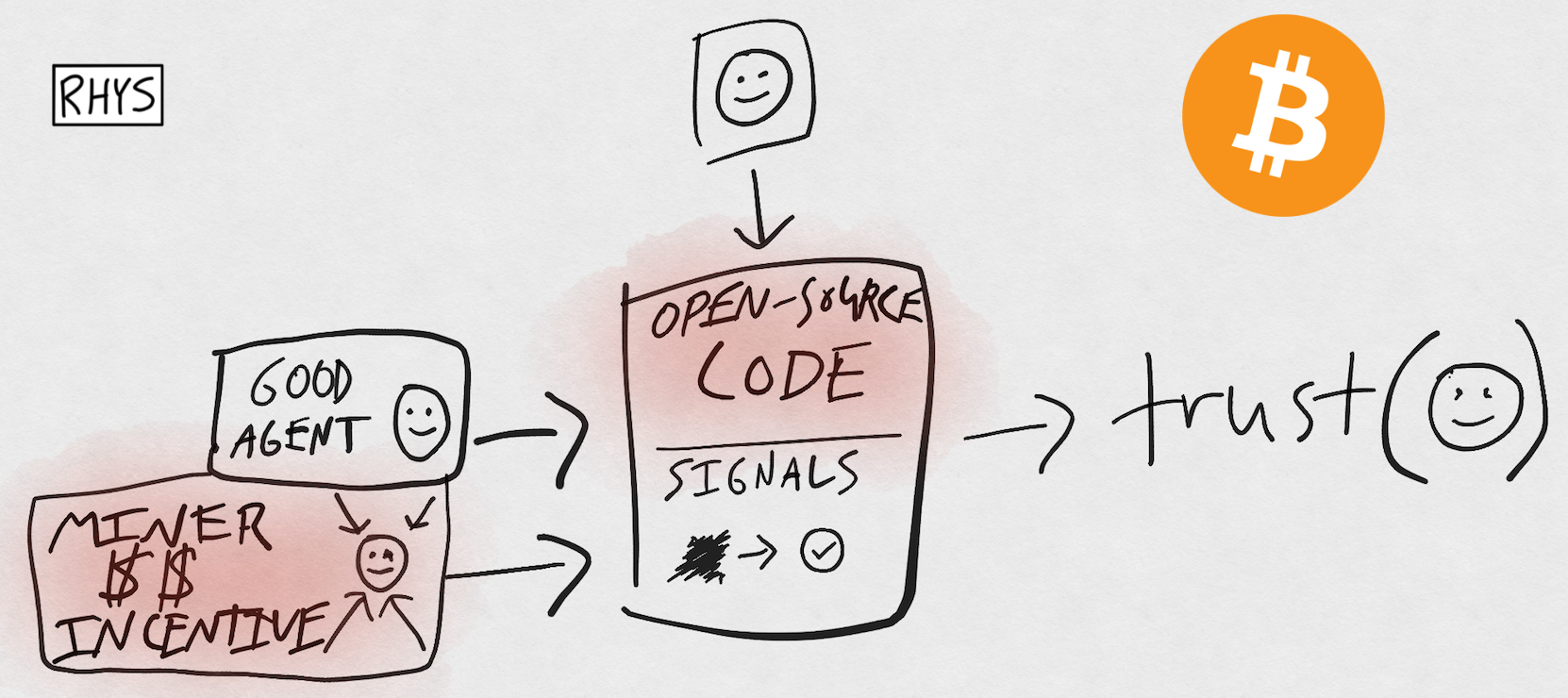

How Distributed Networks Create Trust

Example #1: Bitcoin

Distributed networks leverage code to create trust. Let's look at Bitcoin as an example.

Bitcoin increases transparency (turning the unknown into the known, which decreases the need for trust) through its open-source repository.

Also, Bitcoin uses financial incentives to ensure trust with miners. The protocol doesn't just nicely ask miners to order transactions. Instead, it rewards miners who do it correctly, and miners who do it incorrectly lose out: the network rejects their blocks, so they earn nothing for the work they spent.
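The incentive can be sketched as a toy payoff calculation. This is a simplification with made-up numbers, not how Bitcoin computes anything: the `miner_payoff` function and its parameters are hypothetical, and the only assumption borrowed from the protocol is that an invalid block earns no reward.

```python
def miner_payoff(block_is_valid: bool, block_reward: float, mining_cost: float) -> float:
    """Net payoff for one mined block in a toy model.

    Assumption: other nodes reject an invalid block, so its miner
    collects no reward but has already paid the cost of mining it.
    """
    reward = block_reward if block_is_valid else 0.0
    return reward - mining_cost


# Hypothetical numbers, in arbitrary units.
honest = miner_payoff(block_is_valid=True, block_reward=10.0, mining_cost=6.0)
cheating = miner_payoff(block_is_valid=False, block_reward=10.0, mining_cost=6.0)

print(honest)    # 4.0
print(cheating)  # -6.0
```

The point is that nobody has to trust the miner's character. Following the rules is simply the better-paying option.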

For more on this, see Chris Dixon's announcement of a16z crypto:

Blockchain computers are new types of computers where the unique capability is trust between users, developers, and the platform itself. This trust emerges from the mathematical and game-theoretic properties of the system, without depending on the trustworthiness of individual network participants.

Trust is a new software primitive from which other components can be constructed. The first and most prominent example is digital money, made famous by Bitcoin. But, as we’ve discovered over the past few years, many other software components can be constructed using the building blocks of trust.

Example #2: The Internet Research Agency

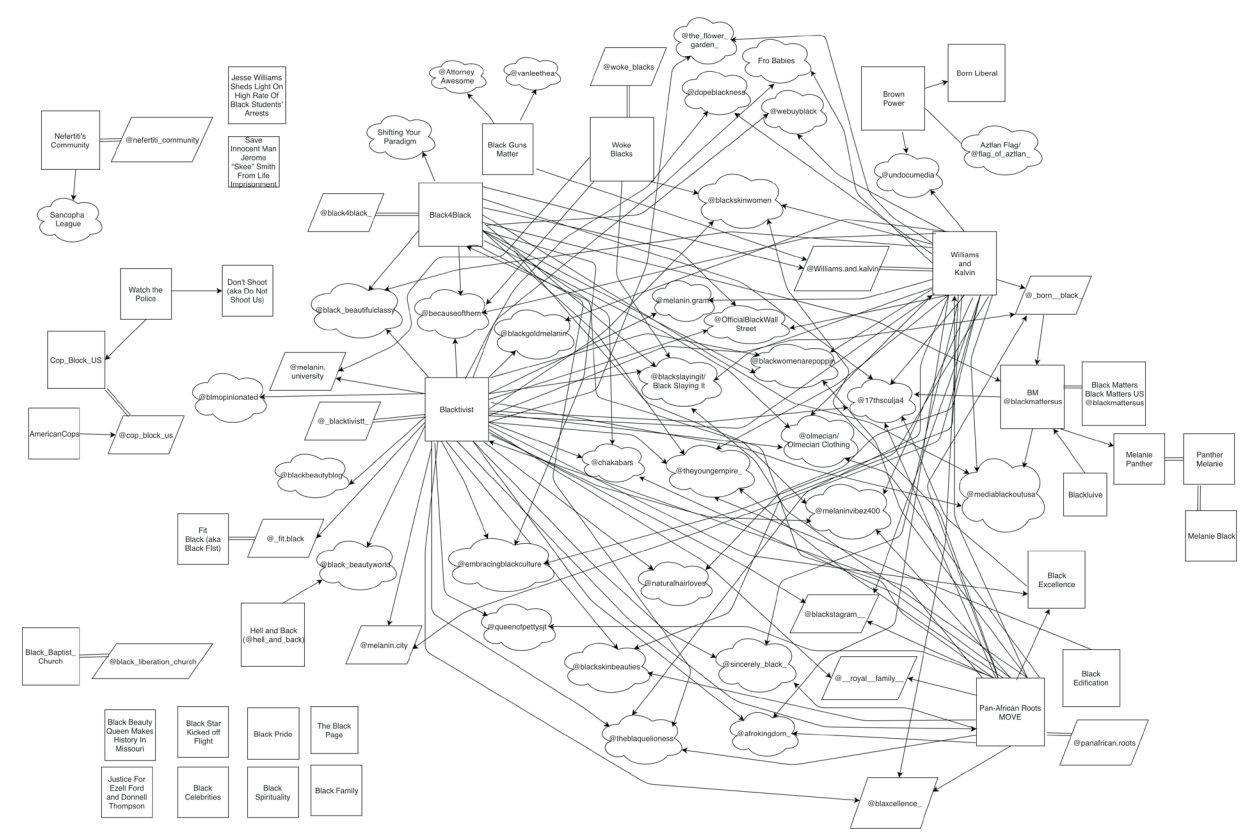

As another example, let's look at how the Internet Research Agency (IRA) exploited distributed trust signals. They used bots and fake signals to create "fake trust".

Some background: The IRA is a Russian company that used social media to influence the 2016 US election. With an annual budget of over $10M, they reached 126M people on Facebook (and millions more on other platforms).

What was their main tactic? Build trust with an online group, then leverage that trust to create disunity. They created "fake trust" on both the left and the right. For example, here are all of their interconnected social media properties that targeted black Americans:

In these groups, the IRA would create content like this:

And over time, they built more and more trust in these fake groups using the signals:

- "Hey look, we have the same identity! We have shared goals and values."

- "And look at all of these people that follow us!"

By exploiting our new code-based signals for distributed trust, the IRA was able to generate new (packets of) trust, and then leverage it to their own (bad) ends.
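Here is a rough sketch of why a signal like follower count is easy to fake. The account names, the trust weights, and both functions are hypothetical. The weighted version is just one possible alternative, not something any platform is known to use.

```python
def follower_count_signal(followers: list[str]) -> int:
    """Naive signal: every follower counts the same."""
    return len(followers)


def weighted_signal(followers: list[str], my_trust: dict[str, float]) -> float:
    """Weight each follower by how much I already trust them (0 to 1).

    Assumption: accounts I know nothing about get weight 0.
    """
    return sum(my_trust.get(name, 0.0) for name in followers)


# Hypothetical data: two real accounts, then 1,000 fake ones.
my_trust = {"alice": 1.0, "bob": 0.5}
real = ["alice", "bob"]
bots = [f"bot_{i}" for i in range(1000)]

print(follower_count_signal(real + bots))      # 1002
print(weighted_signal(real + bots, my_trust))  # 1.5
```

The naive count jumps from 2 to 1002 once the bots show up, while the weighted score doesn't move. The tradeoff is that the weighted version only works if you already trust someone in the network.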

Example #3: Trump

Trump has strong distributed trust signals. He has lots of followers:

And he's followed by people that I follow:

Plus, he has great Amazon ratings for his books:

This contrasts with Trump's lack of success in centralized trust signals. Newspapers, the Republican Party, and most elite institutions didn't like Trump. But it didn't matter. Trump was perfect for a distributed trust ecosystem. He "tells it like it is" and the people like that.

Trump was an "accidental" exploit of distributed trust. Trump just kept being himself on the internet and eventually he won.

This leads directly to our final point—abstracting trust makes it abundant, but also means that you get "good" trust and "bad" trust.

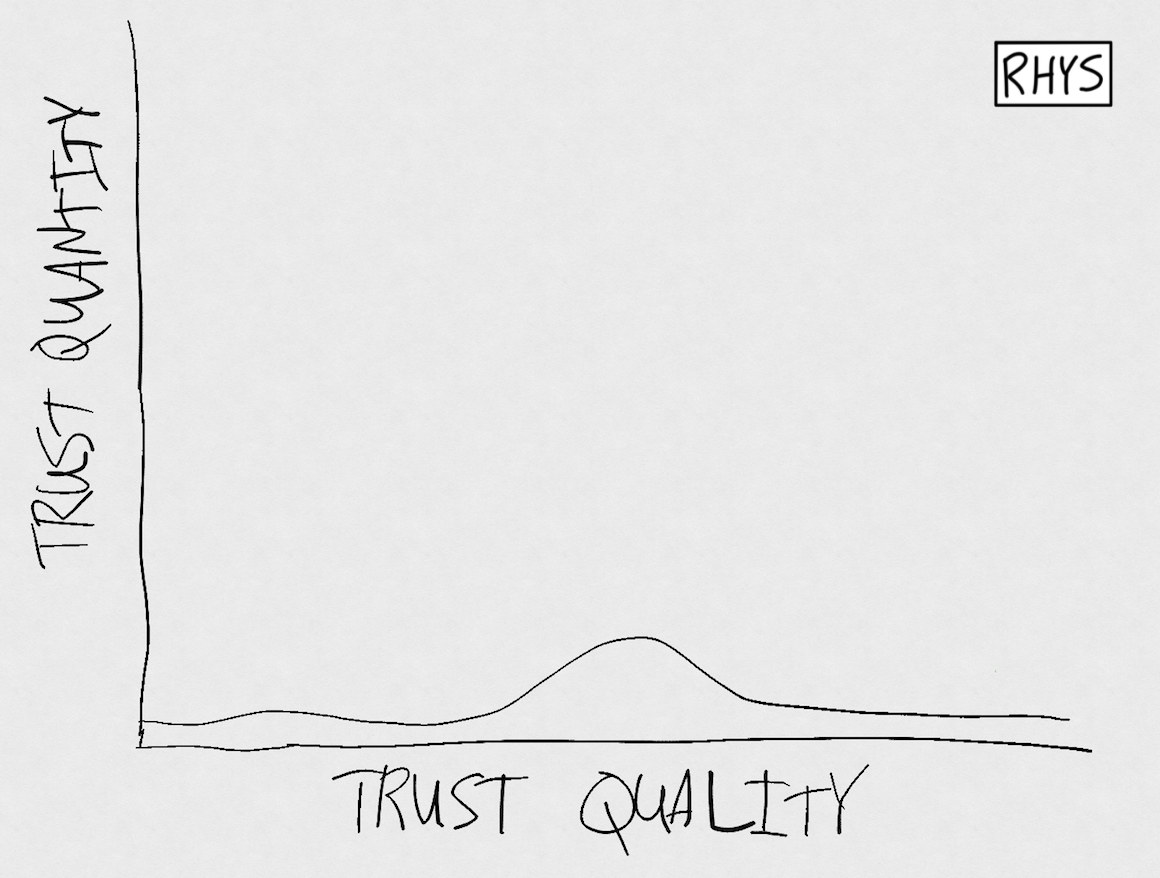

IV. How Abstraction of Trust Leads to Variable-Quality Abundance

In Defining Abundance, we looked at how abundance leads to heterogeneity in quality. As with information, we used to have less trust (i.e., when it was only produced locally or by centralized institutions).

But now that we've commoditized/abstracted trust (with code), we are starting to see an abundance of it. Some of this trust is good, some is bad.

Conclusion

As a summary:

I. Trust is a confident relationship with the unknown.

II. Society is shifting from centralized to decentralized trust.

III. We've abstracted trust (through code) to create decentralized trust in networks.

IV. This newly abundant trust can be good or bad. It is variable-quality abundance.

Thanks for reading. As always, I'm happy to hear feedback and answer questions.

Next time, we'll explore the abstraction of money.

Notes:

Centralized Institutions

- So many graphs show institutionalized trust decreasing but none show distributed trust increasing? Why not? How would we measure it? (# of total transactions is a pretty good metric. If the world is doing more transactions, that likely means there's more trust.)

- We're used to punishment from centralized institutions (law/police from govt., hell from religion). These moralistic god / high-modernist punishments were crucial for cultural evolution and for increasing trust across strangers. Question: what is the networked version of this? How can networks create moral capital (to use Haidt's term) in a distributed way?

- I don't know enough about how centralized institutions create trust. Contracts, laws, police, standards, moralizing gods. What else?

- How does decentralized trust relate to centralized? Is distributed trust creating competitive pressures on centralized trust? Does this mean that centralized institutions are getting better at what's in their wheelhouse (e.g. creating strong brands)?

- "Bad" trust also happened with centralized trust. For example, this is a quote from The Doctrine of Fascism, by Benito Mussolini. [Our movement rejects the view of man] as an individual, standing by himself, self-centered, subject to natural law, which instinctively urges him toward a life of selfish momentary pleasure; it sees not only the individual but the nation and the country; individuals and generations bound together by a moral law, with common traditions and a mission which, suppressing the instinct for life closed in a brief circle of pleasure, builds up a higher life, founded on duty, a life free from the limitations of time and space, in which the individual, by self-sacrifice, the renunciation of self-interest … can achieve that purely spiritual existence in which his value as a man consists.

- One note on the Nature article I linked earlier: Complex societies precede moralizing gods throughout world history. It was commonly thought that gods created "prosocial supernatural punishment" that allowed trust (and therefore complexity) to exist. But actually, they co-evolved with each other. (Classic.) There was a standardizing of religious traditions that ~preceded complex societies. Then came the gods. "Moralizing gods are not a prerequisite for the evolution of social complexity, but they may help to sustain and expand complex multi-ethnic empires after they have become established."

Representing/Defining Trust

- I don't know the best way to categorize the fact that trust is context-dependent. Trust X to do Y in Z context. Not just trust X. I think the most important type of trust is "trust to cooperate". That's what we need most.

- I'm not sure the best way to visually represent the differences between trust as a primitive vs. food/goods/info as a primitive.

- Related: I'm not that interested in transactional trust. (How to get people to buy things.) I'm more interested in trust like: how can we get people to trust adding money to shared pools? (Giving to others.)

- What is the relationship between trust and risk?

- You can break "good person" down into "aligned goals" and "aligned values". Values often show up as community moderation standards. For example, r/ChangeMyView has rules like—don't be rude, don't accuse in bad faith.

- On bad vs. good trust: It's worth differentiating between trust that is leveraged for bad ends (IRA) and trust that (at the object level) is weak/non-resilient to attack (also, IRA).

Other

- Astroturfing as an exploit of distributed trust

- "Strange familiarity" is the business term for making the unknown feel familiar in order to increase trust. (AirBnB is like a hotel!)

- Thus far, I've looked at abundance as zero-cost supply. But it's also zero-cost demand (when the cost of accessing something is ~0). For trust, this makes me think—how do we increase the demand for trust? Right now, it feels like people don't want to trust people from The Other Side. How can we help people want to trust others?

- Trust feels very connected to predictive processing (our brain's desire to decrease uncertainty). Also, Buster Benson's work on cognitive biases (overview, summary).

- Is the Chinese Social Credit System the best current example of Trust-as-a-Service? Maybe EigenTrust?

- What would a Proof-of-Trust look like? What would I need in order to just give someone money to use however they'd like?

- Is there a sousveillance version of the Chinese Social Credit System? Remember that the goal of that system is to create trust. We do want to create trust, just in a less authoritarian way.

- Trust is mediated by power. I fully trust giving money to any person in extreme poverty (Level 1). This is because: a) I mostly know what they're going to do with it—support basic needs and get to Level 2. b) They don't have much power. They won't be able to buy a nuke with it.

- I want to continue upgrading our signaling API to help with distributed trust. e.g. How can we get more people to use HTWWM manuals, and what should they look like? Beyond pronouns, what should we add?

- Is it possible to create less easily weaponizable trust?

- I didn't discuss reputation here, but should've :).

- Of course, there are many positive examples of distributed trust as well. (AirBnB is a personal favorite.)

Other Articles/Resources:

- In Alex Danco's emergent layers piece, he talks about the abstraction of trust from a helpful perspective: Machine-to-Machine Trust (Bitcoin), Machine-to-Person Trust (people trusting AI, e.g. chatbots), and Person-to-Person trust (Uber, AirBnB, marketplaces). I'd also add Person-to-Nature trust. Can we trust nature to "be good" to us? (In times of climate change, no.)

- https://www.airbnb.com/trust

- See the part of my book on trust here

- Cialdini's book "Influence" is highly connected to trust. The best way to get people to buy stuff (to get them to say yes) is to have them trust you. 6 factors here.

- Stanford's Distributed Trust Lab (mostly focused on cryptography and economics)

- https://en.wikipedia.org/wiki/Trust_(social_science)

- https://en.wikipedia.org/wiki/Trust_metric. Examples include eBay's Feedback Rating and Slashdot's notion of karma.

- https://en.wikipedia.org/wiki/Web_of_trust was only introduced in 1992!

- The Changing Minds website has some amazing articles on trust. For example, on trust. Or, this, on swift trust, which was an interesting "pre-theory" of networked trust.

- For more on blockchain and trust, see Michael Casey's book The Truth Machine and Kevin Werbach's book Blockchain and the New Architecture of Trust.