Information

I'm writing a book on What Information Wants. But nailing down a definition of information is hard. What is information?

Let's look at the Wikipedia definition. That should be helpful, right? (Right?!?)

1. Information can be thought of as the resolution of uncertainty that manifests itself as patterns.

2. The concept of information has different meanings in different contexts.

3. Thus the concept becomes synonymous to notions of constraint, communication, control, data, form, education, knowledge, meaning, understanding, mental stimuli, pattern, perception, proposition, representation, and entropy.

Woof. That doesn't help much.

Today we'll look at:

- How information theory defines information

- How science (physics, chemistry, biology) defines information

- How popular culture defines information

I. How Information Theory Defines Information

Claude Shannon is the father of information theory. Like all fathers of theories, he birthed his theory through a pdf, A Mathematical Theory of Communication (1948).

Shannon was trying to understand how messages like Morse code were created and transferred from one place to another. Questions like: How does the information of • • • (the letter "s") compare to the information of – – • • ("z")?

To answer this, Shannon proposed his definition of information:

Information is a measure of the surprise of a given message.

In the Morse code example above, "s" is less surprising than "z", so it contains less information.

Let's look at another example.

Imagine your grandmother is in the hospital and could die any day now. Depressing example, I know. ¯\_(ツ)_/¯

You receive a text from your dad. But your dad is a dad, so he isn't great at texting. He sends it to you one word at a time. It says:

- Hi

- Son.

- Your

- Grandma

- Is

- ...

Is WHAT?!? Dead or alive, Dad?!?

How much information is contained in this message? Not much. You still don't know if your grandma is dead or alive! All of his words were expected.

But then he sends you the final message:

- Alive.

Ah, now you received information! There was a 50/50 chance your grandma would be alive today. And now you know she is. Hooray. Go grandma.

However, 50/50 is probably giving her too much credit. It was more like a 1% chance she'd still be alive today. So seeing "alive" actually carried even more information.

A low-probability event gives us more information, while a high-probability event gives us less.

Seeing Morse code of • • • ("s") gives us less information than – – • • ("z") because s is more common than z.

How do we measure this information? Shannon invented a new fundamental unit of the universe. (You can do that?) He called it a "bit" (short for "binary digit", a name Shannon credited to John Tukey).

A bit of information cuts the probability space in half. That's why a computer bit has two states, 0 or 1: if both are equally likely, learning which one it is gives you exactly one bit of information.

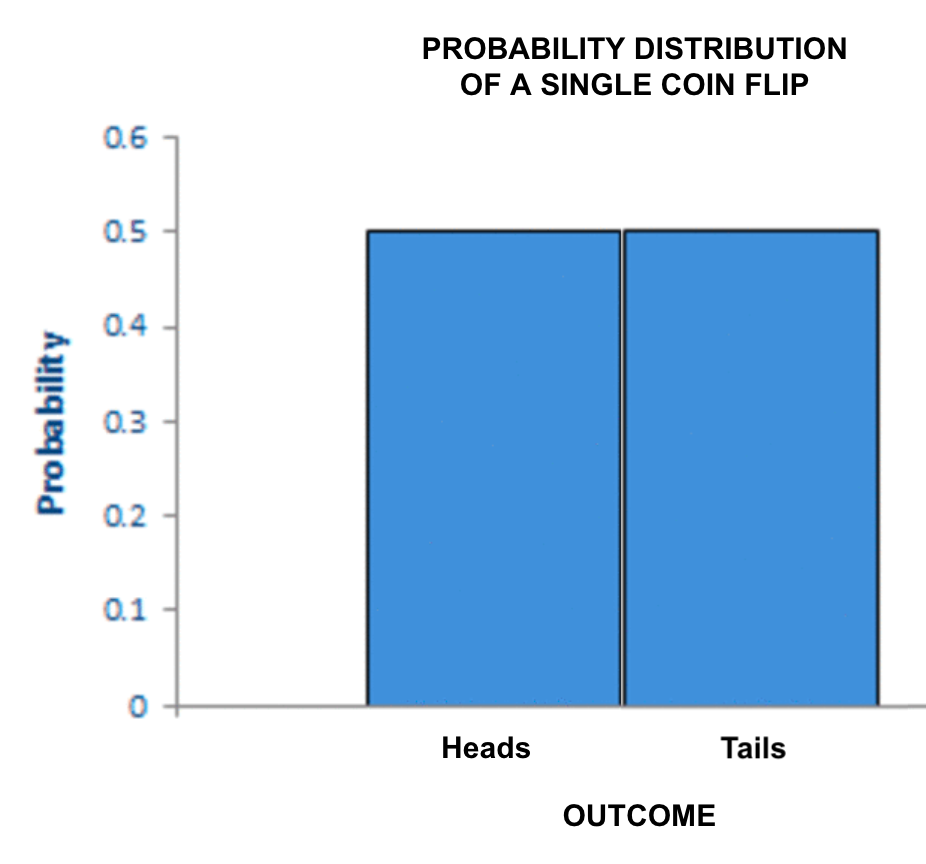

When you flip a fair coin and it turns up heads, you receive 1 bit of information.

Let's look at a more complicated example.

Solving Wordle Using Information Theory

We can apply this concept of "receiving information" and "cutting down the probability space" to solve guessing games like Wordle.

If you don't know how Wordle works, please try it here. I monetize this blog through NYT affiliate links 😉.

I'll be drawing heavily from 3Blue1Brown's amazing video, "Solving Wordle using information theory".

3Blue1Brown's idea is that you can judge how good a guess is by how much it reduces the possibility space, that is, by how much information you gain from each guess.

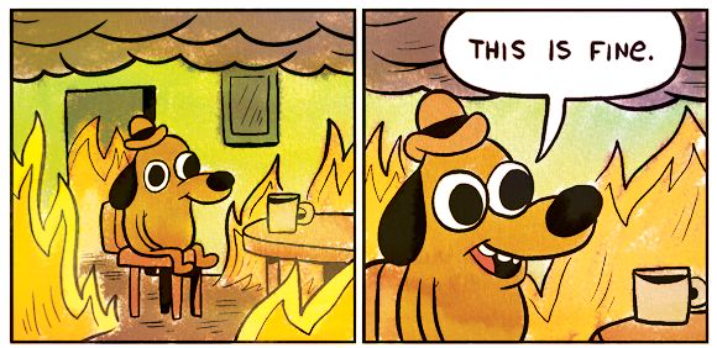

As an example, let's say the secret word is "myths" and we guess the word "scale". We learn that our secret word has an "s" (just not in the first spot) and none of the other letters.

If roughly half of the words have an "s", then that would give us 1 bit of information. It cut the probability space in half.

But let's say we guess "yield" and learn that our secret word has a "y" instead. Y is much less likely to occur than S, so this information cuts down the possibility space more and we receive more information from this guess.

If we cut the space down to 1/4 of its original size, that's two bits of info.
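Here's a minimal Python sketch of that idea, scoring a clue as log2(words before / words after). The 16-word list is a made-up toy, not Wordle's real answer list, and I picked it so that exactly half the words contain "s" and a quarter contain "y", matching the numbers above. It also simplifies real Wordle feedback: it only checks whether a letter appears and ignores the green/yellow position info.

```python
import math

# Hypothetical toy word list (NOT Wordle's real answer list), chosen so that
# 8 of 16 words contain "s" and 4 of 16 contain "y".
WORDS = [
    "myths", "scale", "yield", "stone", "crane", "shirt", "plant", "ghost",
    "brick", "sweet", "flame", "lucky", "tasty", "river", "chard", "storm",
]


def bits_from_clue(words, keep):
    """Bits gained from a clue that keeps only the words where keep(word) is True.

    Assumes at least one word survives the clue (otherwise we'd divide by zero).
    """
    remaining = [w for w in words if keep(w)]
    return math.log2(len(words) / len(remaining)), remaining


bits_s, left_s = bits_from_clue(WORDS, lambda w: "s" in w)
bits_y, left_y = bits_from_clue(WORDS, lambda w: "y" in w)
print(f'contains "s": {len(left_s)}/{len(WORDS)} words left, {bits_s:.2f} bits')
print(f'contains "y": {len(left_y)}/{len(WORDS)} words left, {bits_y:.2f} bits')
```

The rarer letter leaves fewer words standing, so it's worth more bits.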

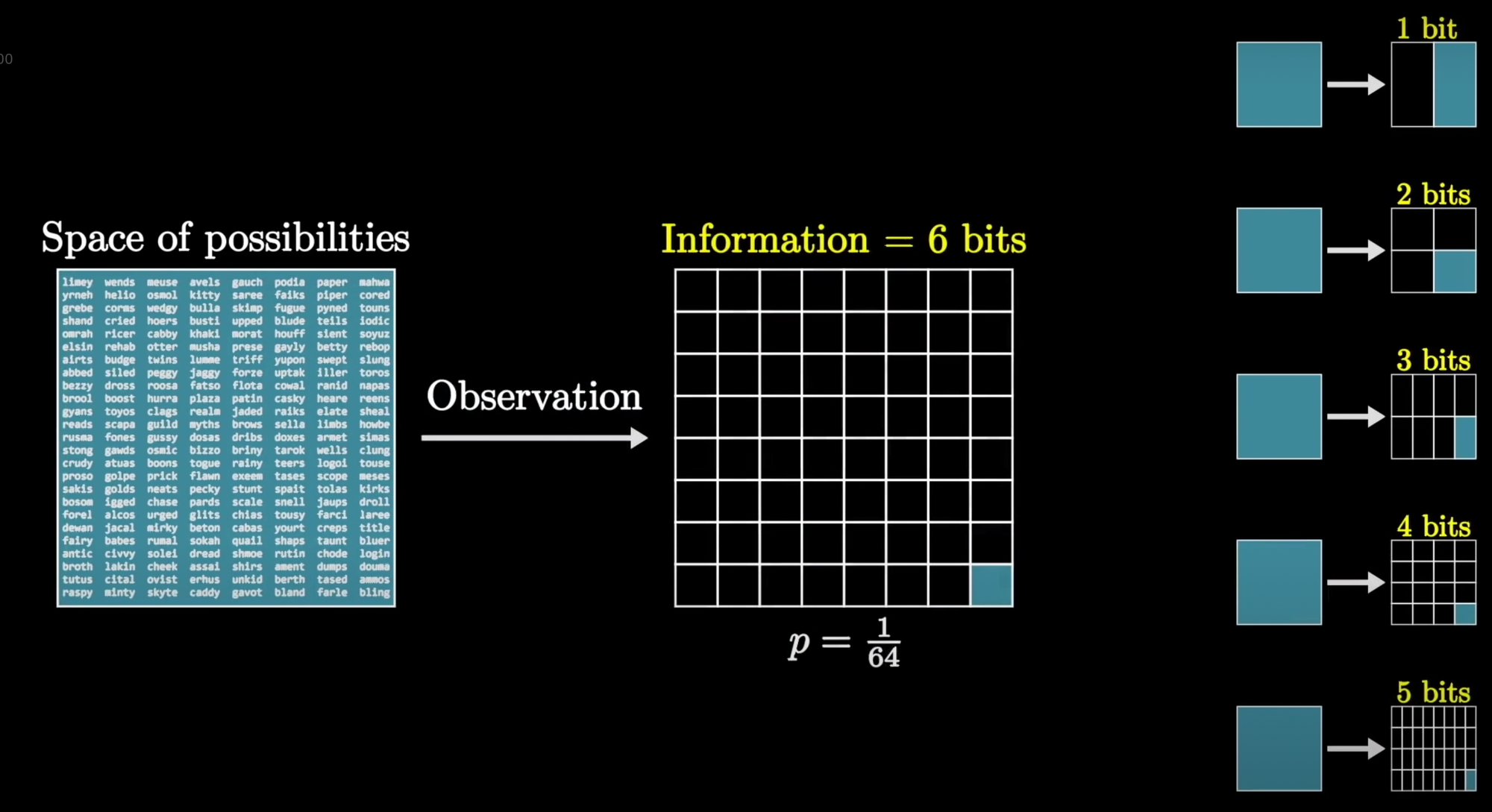

The image below shows how:

- 1/8 is 3 bits

- 1/16 is 4 bits

- 1/32 is 5 bits

- 1/64 is 6 bits

And so on.
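The list above is just repeated halving. Here's a small Python sketch that counts the halvings directly (no logarithms yet), assuming the fraction of the space left is always exactly a power of 1/2. `bits_by_halving` is a name I made up for illustration.

```python
from fractions import Fraction


def bits_by_halving(fraction_left):
    """Count how many halvings shrink the whole space (1) down to fraction_left.

    Assumes fraction_left is exactly 1/2**k for some whole number k.
    """
    space = Fraction(1)
    bits = 0
    while space > fraction_left:
        space /= 2  # each bit of information cuts the space in half
        bits += 1
    if space != fraction_left:
        raise ValueError("fraction_left must be a power of 1/2")
    return bits


for denominator in (2, 4, 8, 16, 32, 64):
    bits = bits_by_halving(Fraction(1, denominator))
    print(f"1/{denominator} of the space left -> {bits} bits")
```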

So what's the mathematical definition of information?

We could start with something like:

Information = 1 / probability.

The amount of information we gain is the inverse of how probable that observation is.

- So if something is improbable, like learning our secret word has a "y", then we get more information.

- If something is probable, like learning our secret word has an "s", we gain less information.

This math definition is almost right. But we actually need to add a "log" because bits represent cutting the space in half over and over. Each extra bit doubles the number of possibilities, so the relationship is exponential, not linear, and the log undoes that exponential.

The information in a 1/64 chance event is not 64 bits, but rather 6 bits (2^6 is 64).

So Shannon's equation for information is:

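Information = log2(1/p)

Here p is the probability of the outcome, and the log is base 2 so that the answer comes out in bits.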
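A quick Python check of the formula, comparing the naive 1/p with log2(1/p) for a 1-in-64 event, plus the grandma numbers from earlier (50/50 odds versus roughly 1%). `information_bits` is just a name I made up for this sketch.

```python
import math


def information_bits(p):
    """Shannon information (surprisal) of an outcome with probability p, in bits."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return math.log2(1 / p)


# The naive guess 1/p versus the log version, for a 1-in-64 event.
p = 1 / 64
print("1/p       =", 1 / p)                # 64.0, far too big
print("log2(1/p) =", information_bits(p))  # 6.0 bits

# Grandma example: seeing "alive" at 50/50 odds vs. at 1% odds.
print(f"p = 0.50: {information_bits(0.50):.2f} bits")
print(f"p = 0.01: {information_bits(0.01):.2f} bits")
```

The 1% case comes out at about 6.64 bits, a lot more than the single bit from the 50/50 case.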

Entropy

One other annoying part of defining information is that information is always connected to a definition of entropy. But entropy is easy to understand, right? Let's look to trusty Wikipedia again:

1. Entropy is a scientific concept as well as a measurable physical property that is most commonly associated with a state of disorder, randomness, or uncertainty.

2. The term and the concept are used in diverse fields, from classical thermodynamics, to the microscopic description of nature in statistical physics, and to the principles of information theory.

3. It has found far-ranging applications in chemistry and physics, in biological systems and their relation to life, in cosmology, economics, sociology, weather science, climate change, and information systems including the transmission of information in telecommunication.

Oh no, not again!

Let's break it down. What is Shannon's definition of entropy?

Entropy is how much uncertainty is left.

As a first example, a single coin flip doesn't have much entropy. It'll either be heads or tails. We'd say there's only 1 bit of uncertainty left.

We can visualize this entropy as a probability distribution. There's a 50% chance we get a heads and a 50% chance we get a tails.

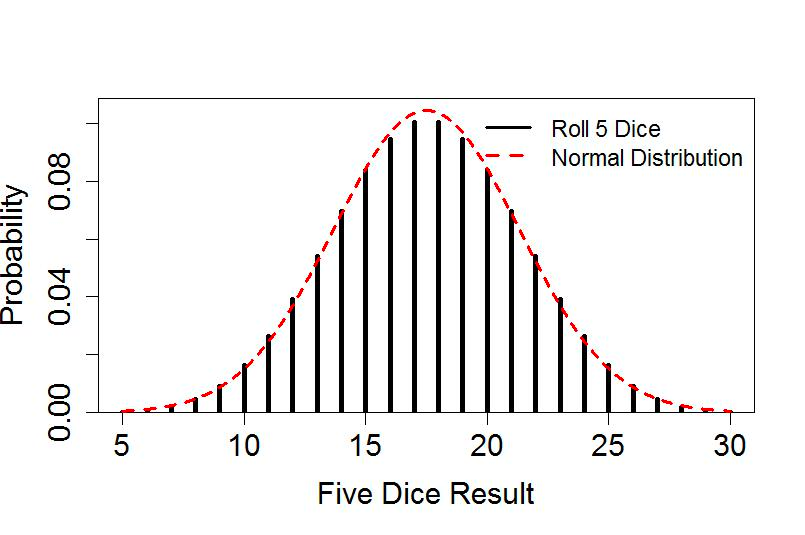

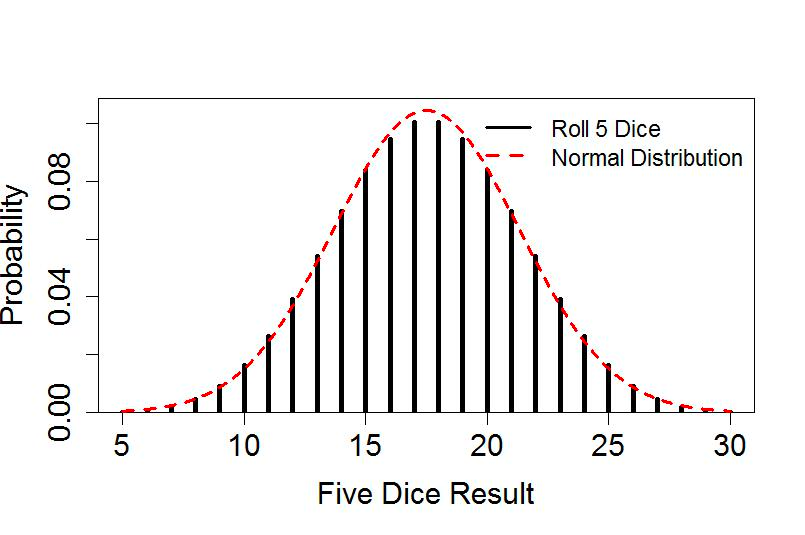

As a second example, we can look at the entropy of rolling five dice. This probability distribution is wider, which shows that there's lots of uncertainty left.

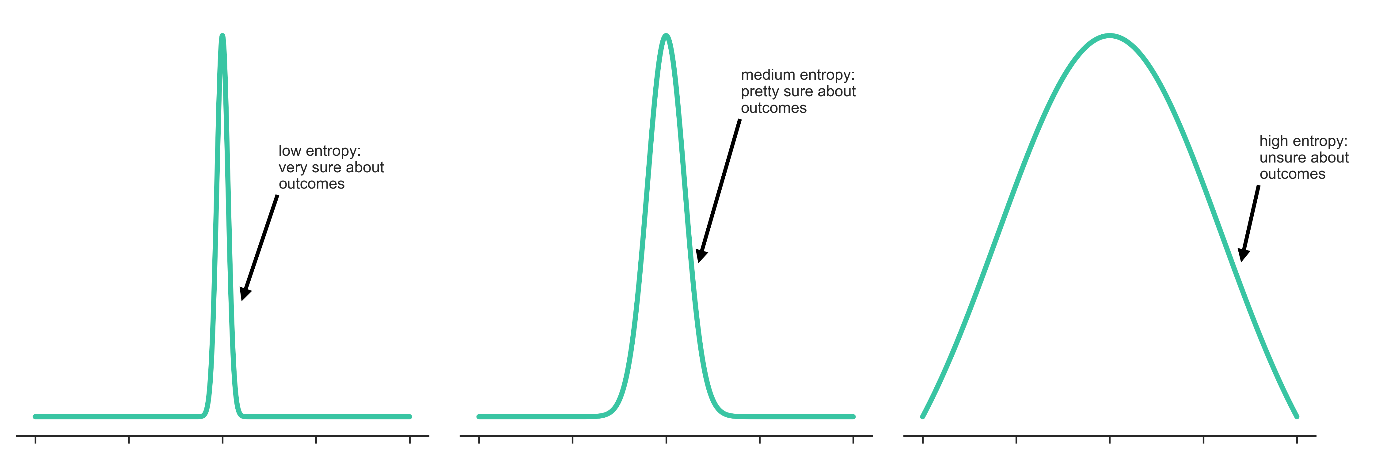

We can generalize this in the probability distributions below. If we're sure about the outcomes, we'd say entropy is low. If we're unsure, entropy is high.
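Shannon's formula for entropy (not written out above) is H = −Σ p·log2(p): the average surprise over all possible outcomes. Here's a minimal Python sketch comparing the coin with five dice. I'm assuming the five-dice distribution refers to the total of the five dice.

```python
import math
from collections import Counter
from itertools import product


def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p) of a probability distribution, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# A fair coin: two equally likely outcomes.
coin = [0.5, 0.5]

# The total of five fair dice: tally every one of the 6**5 ordered rolls.
counts = Counter(sum(roll) for roll in product(range(1, 7), repeat=5))
total_rolls = 6 ** 5
five_dice_sum = [c / total_rolls for c in counts.values()]

print(f"fair coin:        {entropy_bits(coin):.2f} bits")
print(f"sum of five dice: {entropy_bits(five_dice_sum):.2f} bits")
```

The wider dice distribution has several times the coin's entropy.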

Coming back to our Wordle example, the entropy tracks how many possible words are left. That's how much uncertainty remains.

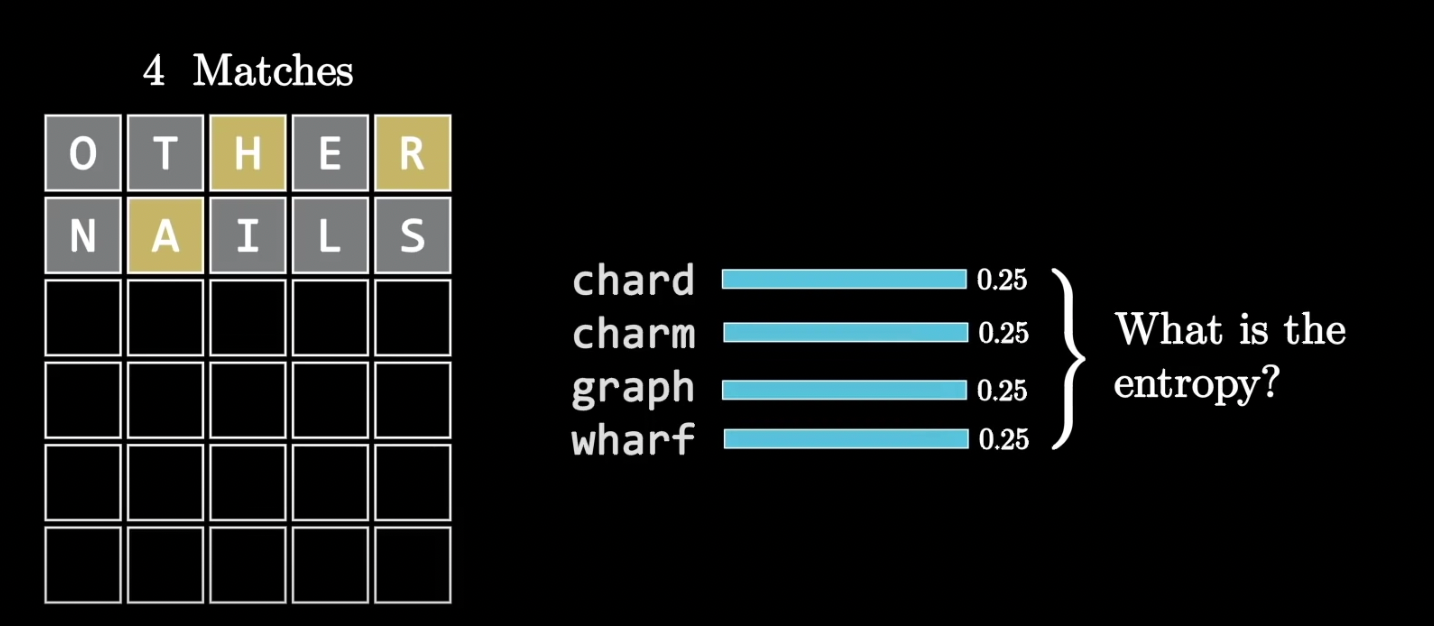

So in the example below, we've guessed "other" and "nails." There are four words left: chard, charm, graph, and wharf.

How much entropy is left?

Intuitively, we wouldn't expect the entropy to be something large like 6 bits. That would mean there were 64 equally likely options still left. (2^6 = 64)

We also wouldn't expect something too small like 1 bit. That would mean there are 2 equally likely options left. (2^1 = 2) There's more uncertainty left here than a single coin flip.

Indeed, there are 2 bits of entropy here. There are four options left, which is two coin flips left of uncertainty. 2^2 = 4.
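The same arithmetic in Python, assuming the four remaining words are equally likely. (If some were more common than others, the entropy would come out below 2 bits.)

```python
import math

remaining = ["chard", "charm", "graph", "wharf"]

# Assume every remaining word is equally likely to be the answer.
p = 1 / len(remaining)
entropy = -sum(p * math.log2(p) for _ in remaining)
print(f"{len(remaining)} words left -> {entropy} bits of entropy")  # 2.0
```

With equally likely options, the sum collapses to log2 of the number of options: log2(4) = 2.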

To conclude this section:

Shannon's information is a measure of how surprising a given outcome is among all possible outcomes.

While entropy is the amount of uncertainty left in the system.

You receive information when observing the world. What's left is entropy.

Let's now move to the second set of definitions: How do the natural sciences define information and entropy?

II. How Natural Sciences Define Information

For the sciences, let's actually start with entropy instead of information.

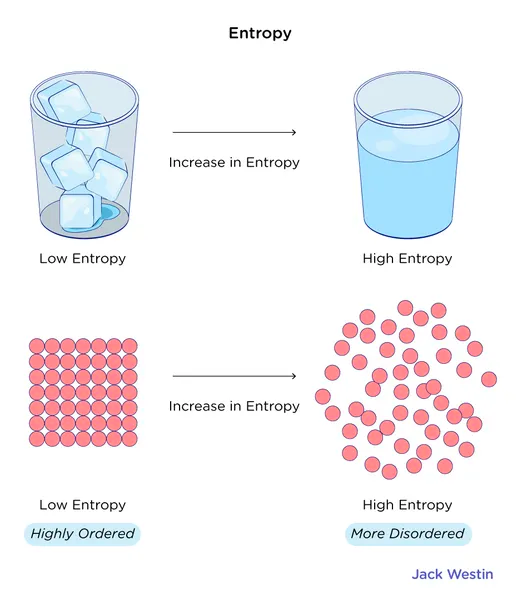

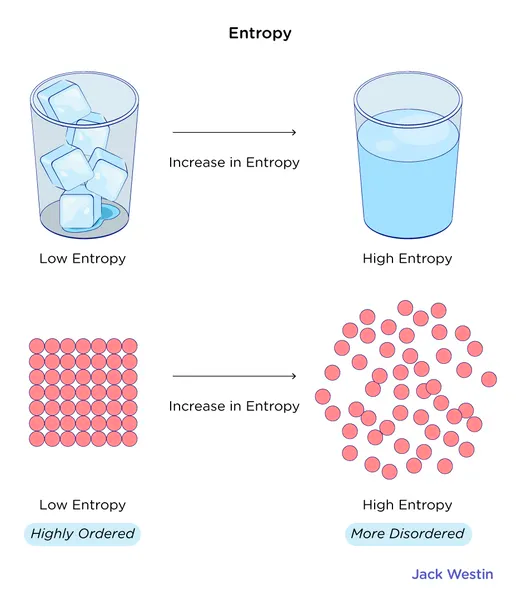

In physics, entropy is a measurement of how disordered a substance, like water, is.

Do you think a glass of ice cubes has more or less entropy than a glass of water? Which one is more disordered?

Counterintuitively, a glass of ice cubes might look disordered, but at the molecular level it's quite ordered. Meanwhile, a glass of water might look ordered, but at the molecular level it's quite disordered.

A glass of ice cubes has low entropy and high information because it's highly ordered.

When it melts into water, it has high entropy and loses information because it's more disordered.

We can think of this like rolling dice.

The ice cube is like rolling all sixes, while the glass of water is like rolling an average roll.

The probability of rolling a 30 with five different dice is quite low. You need all sixes! It has high information and low entropy.

But rolling an 18 with five dice is more likely. You can have 1, 2, 4, 5, 6 like below. Or 1, 2, 3, 6, 6. Or 1, 3, 3, 5, 6. And so on.

There are lots of ways to skin a cat, er, roll an 18.

Rolling five dice makes the probability distribution below. 18 is quite probable while 30 is unlikely.
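You can brute-force these odds in a few lines of Python by listing every ordered roll of five dice. This is an illustrative sketch, not the code behind the original figure.

```python
import math
from itertools import product

rolls = list(product(range(1, 7), repeat=5))  # all 6**5 = 7776 ordered rolls
ways_30 = sum(1 for r in rolls if sum(r) == 30)
ways_18 = sum(1 for r in rolls if sum(r) == 18)

for total, ways in ((30, ways_30), (18, ways_18)):
    p = ways / len(rolls)
    print(f"sum {total}: {ways} ways, p = {p:.4f}, surprisal = {math.log2(1 / p):.2f} bits")
```

It finds exactly 1 way (out of 7,776) to roll a 30, versus 780 ways to roll an 18. So all sixes carries roughly 12.9 bits of surprise, and an 18 only about 3.3.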

Now let's play God and roll the H2O molecules instead. The odds that you'd roll the molecules and they'd line up perfectly as ice cubes are quite low. It's like rolling all sixes.

But the odds that you'd roll the H2O into the jumbled mess of liquid water are much higher.

This is what physicists mean when they look at one possible microstate (a dice roll) among all possible microstates (all possible dice rolls).

Low entropy microstates give us a lot of information. We didn't expect the dice to be all sixes or the H2O to be in a perfect ice cube, but it was! We were surprised to see it.

High entropy microstates give us little information. We expect the dice to be jumbled around, just like we expect the H2O molecules to be jumbled. We don't get much info because it was expected.

This should look familiar to Shannon's definitions of information and entropy above. In fact, although the physics definitions of entropy are from the late 1800s, we now understand them as a specific manifestation of Shannon's more abstract definition from the 1940s.

There go humans again, moving up the ladder of abstraction. 🙂

To Shannon, we gain a lot of information from the binary string 11111. That was unexpected, just like rolling 66666 is unexpected, and just like a bunch of water molecules looking like this cube is unexpected:

💧💧💧

💧💧💧

💧💧💧

Shannon's information is a measure of how surprising a given outcome is among all possible outcomes.

Physical information is the same: a measure of how surprising a given physical arrangement is among all possible physical arrangements.

Meanwhile, Shannon's entropy is the amount of uncertainty left in the system.

But wait! Lots of uncertainty means lots of entropy. Rolling five dice has lots of uncertainty and lots of entropy.

So how is disorder (not uncertainty) tied to entropy?

The glass of water has more disorder because if you choose a molecule from it, it could be going at a variety of speeds. Although it looks smooth, there's actually lots of sloshing around happening. Some molecules are barely moving, some are going really fast. They're all bumping into each other.

Versus an ice cube, where all the molecules are doing the same thing: vibrating in place in a fixed crystal lattice. You know what you're going to get, a boring H2O molecule not going too fast.

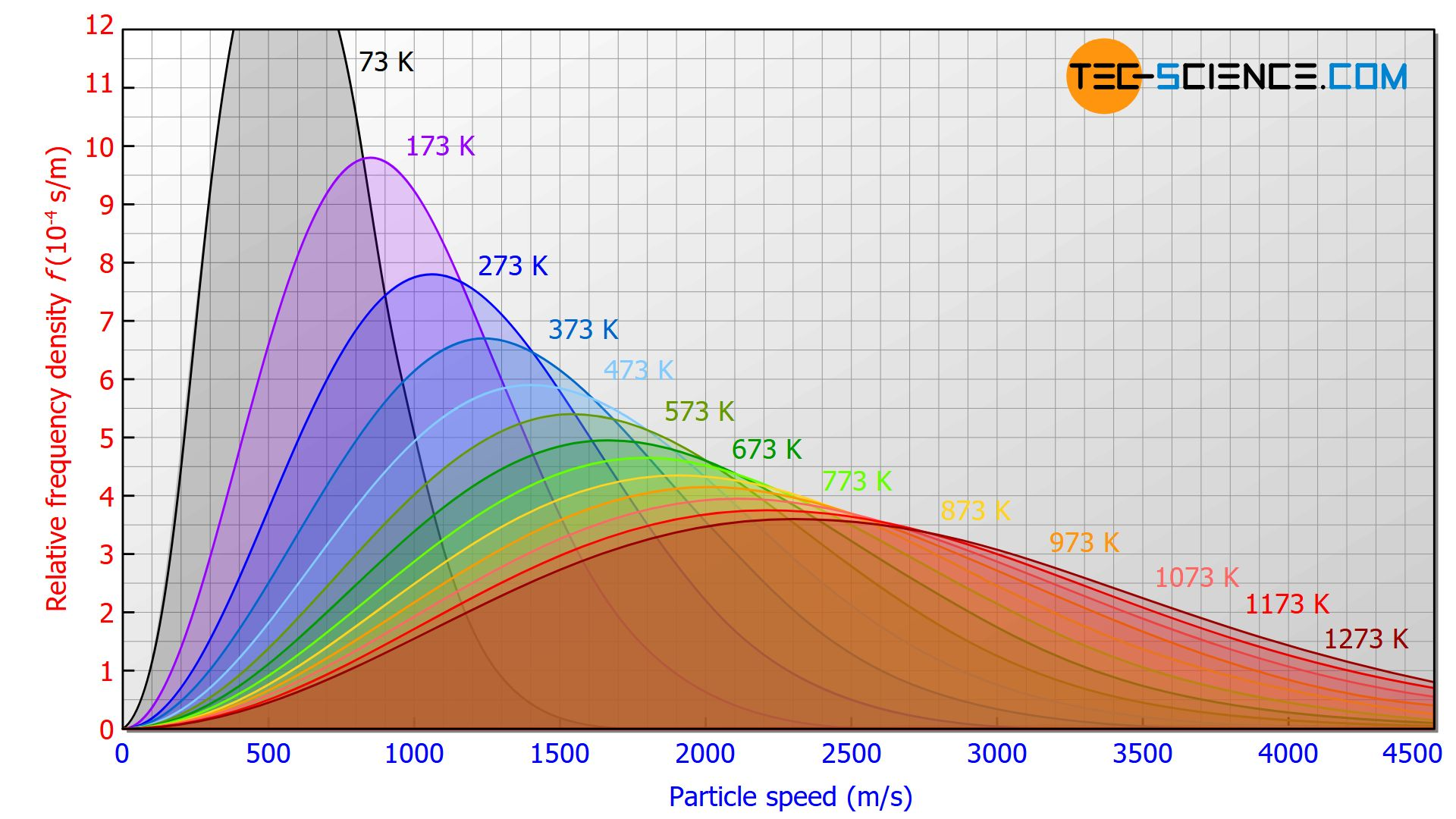

This is mathematically formalized in something called the Maxwell-Boltzmann distribution (below).

If it's really cold (73 K, about -200 °C, the grey distribution below), then most of the particles (of an "ideal gas") move at similar speeds, clustered around 500 m/s.

But if it's really hot (1273 K, the red distribution), then the distribution is much wider. There are molecules going all kinds of crazy speeds: fast, slow, and in between.
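To see the "wider when hotter" effect in numbers, here's a Python sketch using the standard Maxwell-Boltzmann speed formulas for an ideal gas: peak speed sqrt(2kT/m) and spread (standard deviation) sqrt((3 − 8/π)kT/m). The text doesn't say which gas the figure uses, so helium here is purely my assumption for illustration.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg


def mb_speed_stats(temp_k, mass_amu):
    """Peak speed and spread (std. dev.) of the Maxwell-Boltzmann speed distribution, in m/s."""
    m = mass_amu * AMU
    v_peak = math.sqrt(2 * K_B * temp_k / m)
    v_spread = math.sqrt((3 - 8 / math.pi) * K_B * temp_k / m)
    return v_peak, v_spread


HELIUM_AMU = 4.0026  # assumed gas; swap in another molar mass to compare
for temp in (73, 1273):
    peak, spread = mb_speed_stats(temp, HELIUM_AMU)
    print(f"{temp:>5} K: peak ~{peak:,.0f} m/s, spread ~{spread:,.0f} m/s")
```

Whatever the gas, the spread grows with the square root of temperature, so going from 73 K to 1273 K widens the distribution by a factor of about 4.2.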

The wider the distribution, the more disorder, the more uncertainty, the higher the entropy. So:

Shannon's entropy is the amount of uncertainty left in the system.

Physical entropy is the amount of physical uncertainty left in the system.

Now that we understand a general natural sciences definition of entropy and information, let's apply it in a variety of fields: physics, chemistry, biology, and quantum.

A. Information in Physics

Most of the definitions above are taken from physics, specifically from "statistical thermodynamics", which asks the question: What are all the particles doing?

I'd also add this interesting piece: where is information in one of the most important equations, E = mc²?

It's not there! There's only energy (E), mass (m), and the speed of light (c).

Very curious indeed.

If you can tell me more about how information relates to these fundamental units, I'd love to know!

B. Information in Chemistry

How does chemistry think of information and (especially) entropy?

Chemistry often looks at the change in entropy (in a chemical reaction), while physics looks at the current state of entropy.

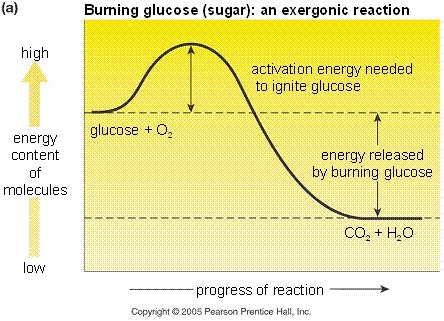

For example, we can look at how our bodies turn glucose into energy. This chemical reaction requires a bit of activation energy to get started, but eventually releases energy, just like a ball turns potential energy into kinetic energy by rolling downhill.

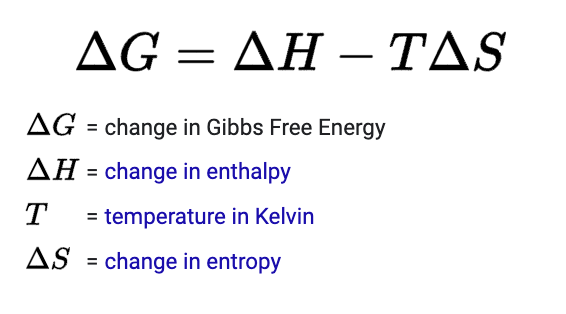

To determine whether a reaction will occur, chemists use the change in Gibbs free energy, ΔG = ΔH − TΔS (where T is temperature), which combines:

- How much energy is released or absorbed as chemical bonds break and form: the enthalpy change, ΔH

- How much more disordered the molecules are after the reaction: the entropy change, ΔS

A reaction will occur spontaneously when the change in Gibbs free energy (ΔG) is negative. That's guaranteed when the reaction releases energy (ΔH < 0) and also increases entropy/disorder (ΔS > 0). The universe wants to go in that direction! (As foretold by our friend the 2nd law of thermodynamics.)
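Gibbs free energy is usually written ΔG = ΔH − TΔS, and a process is spontaneous when ΔG < 0. Here's a small Python sketch that applies it to melting ice, using approximate textbook values for water (ΔH ≈ 6.01 kJ/mol, ΔS ≈ 22.0 J/(mol·K)); treat the numbers as illustrative.

```python
def gibbs_delta_g(delta_h, temp_k, delta_s):
    """ΔG = ΔH - TΔS, with ΔH in kJ/mol, T in K, and ΔS in kJ/(mol·K)."""
    return delta_h - temp_k * delta_s


# Melting ice absorbs heat (ΔH > 0) but increases disorder (ΔS > 0).
# Approximate values for water's melting: ΔH ≈ 6.01 kJ/mol, ΔS ≈ 0.0220 kJ/(mol·K).
DH_FUS, DS_FUS = 6.01, 0.0220

for temp in (263, 298):  # roughly -10 °C and 25 °C
    dg = gibbs_delta_g(DH_FUS, temp, DS_FUS)
    verdict = "melts spontaneously" if dg < 0 else "stays frozen"
    print(f"{temp} K: ΔG = {dg:+.2f} kJ/mol -> ice {verdict}")
```

Melting absorbs energy, so ΔH is positive, but the jump in disorder wins once TΔS is big enough. The crossover is ΔH/ΔS ≈ 273 K, which is 0 °C, right where ice melts.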

C. Information in Biology

Biologists often think of entropy like chemists do: to see if certain reactions will spontaneously occur in our bodies.

But biologists also look at a different form of information: information about something, rather than information as a measure of uncertainty.

The neuroscientist Peter Tse explains it like so:

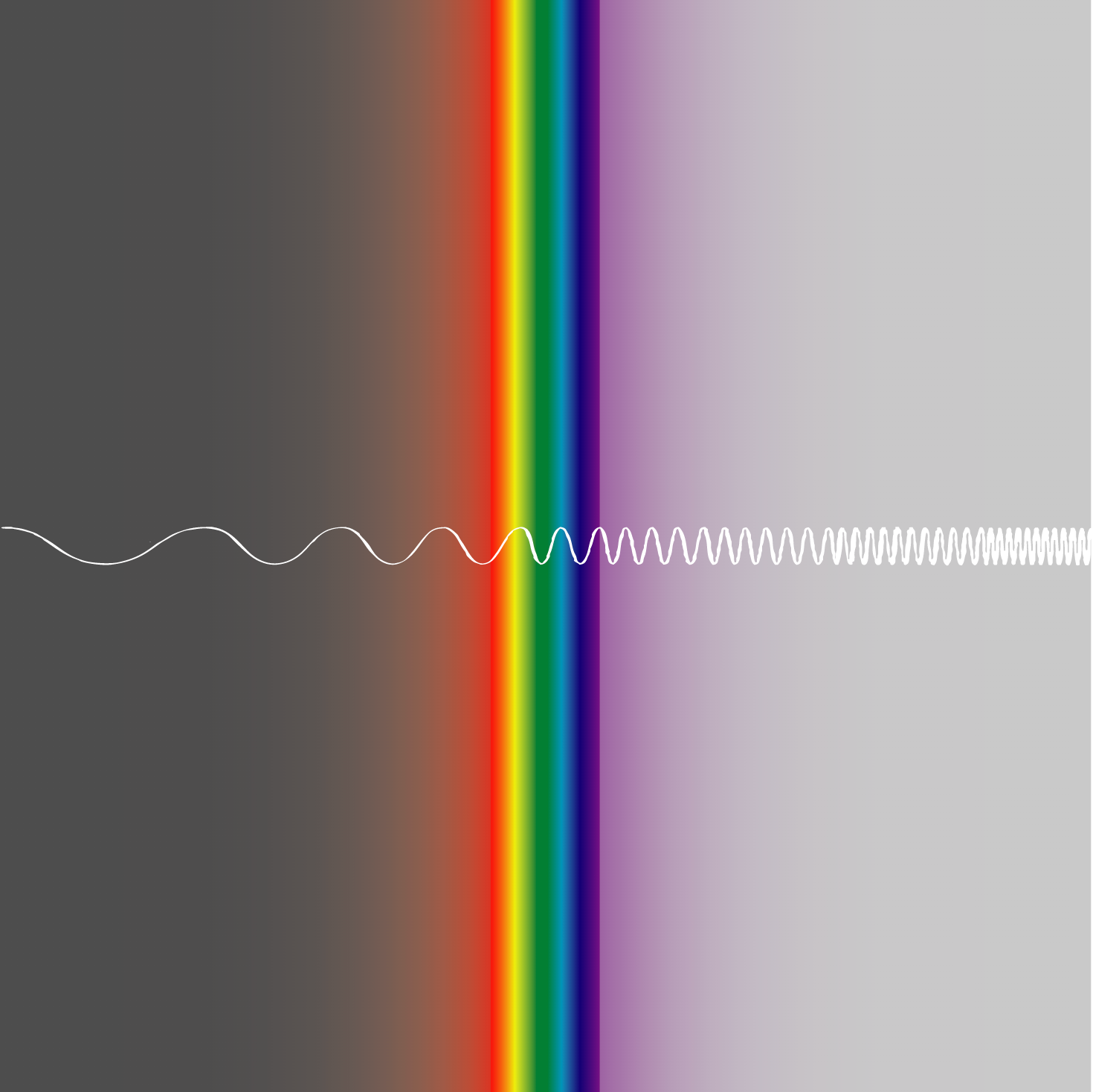

Physics is often concerned with the magnitude and frequency of, for example, light.

But biologists are concerned with phase, not magnitude or frequency.

Two events can be in-phase with each other or out-of-phase.

For example, when drivers see a red traffic light, the cars stop. Red lights and cars stopping are in phase with each other.

Or when we smell our grandmother's cooking and it triggers a neural cascade that helps us remember a childhood memory of sitting on her couch. That is a phase relationship.

The world is full of patterns (or phases). Biologists are interested in understanding how organisms and brains exploit those patterns.

This is what we mean when we say that organisms contain information about their environment, rather than talking about the inherent nature of how their particles are arranged in a probability distribution.

D. Information in Quantum Stuffs

I don't totally get this, but two notes:

- Von Neumann entropy is the quantum equivalent of Shannon entropy

- There's something special about how quantum physics relates to information. Quantum information is a "thing". Qubits are all about probability distributions. It almost feels like quantum physics is a version of classical physics that gives the "correct weight" to information as a first-class citizen.

¯\_(ツ)_/¯
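For the curious, here's a minimal Python sketch of von Neumann entropy for a single qubit: it's the Shannon formula applied to the eigenvalues of the qubit's density matrix. The example states and the helper name `von_neumann_entropy_2x2` are my own choices for illustration.

```python
import math


def von_neumann_entropy_2x2(rho):
    """Von Neumann entropy S = -Tr(rho log2 rho) of a 2x2 Hermitian density matrix, in bits.

    Uses the closed-form eigenvalues of a 2x2 Hermitian matrix, then applies
    the Shannon formula to those eigenvalues.
    """
    (a, b), (_, d) = rho
    mean = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + abs(b) ** 2)
    eigenvalues = [mean + radius, mean - radius]
    return sum(-lam * math.log2(lam) for lam in eigenvalues if lam > 1e-12)


pure_zero = [[1, 0], [0, 0]]          # the qubit is definitely |0>
plus_state = [[0.5, 0.5], [0.5, 0.5]]  # pure superposition (|0> + |1>)/sqrt(2)
fully_mixed = [[0.5, 0], [0, 0.5]]     # classical 50/50 mix of |0> and |1>

for name, rho in [("|0>", pure_zero), ("|+>", plus_state), ("mixed", fully_mixed)]:
    print(f"{name:>6}: {von_neumann_entropy_2x2(rho):.2f} bits")
```

The twist: measuring |+> in the 0/1 basis is a 50/50 coin flip, yet its von Neumann entropy is 0, because the quantum state itself is completely known. Only the genuinely mixed state carries a full bit of uncertainty.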

Now that we've understood:

I. The information-theoretic definition of information

II. The natural sciences definition of information

Let's now turn towards the common use or information technology definition of information.

III. How Information Technology Defines Information

We now come to our final definition of information. In many ways, this is the one we're most familiar with. Information is this text on the internet. It is the cat picture your friend texts you.

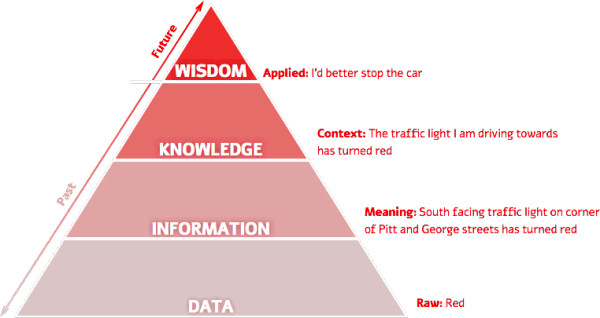

In this context, information will often show up as part of the DIKW (data, information, knowledge, wisdom) pyramid:

Raw data gets processed into information with meaning, which then turns into contextual knowledge, which is then applied to the future as wisdom.

Because this DIKW definition of information is much more intuitive to us, I will not go deeper into how information scientists (librarian types) define information. (Though of course they do so in many other ways.)

Information is a crucial primitive that determines how society evolves.

In the end, I simply think of information as pattern.

Still, I hope this article has given you an overview of:

- How information theory defines information

- How science (physics, chemistry, biology) defines information

- How popular culture defines information

To learn more, check out my book here or join my Interintellect series.

If you have feedback or clearer definitions of information, please let me know! Thanks.

Notes:

- I didn't cover computation but would like to (see Why Information Grows)